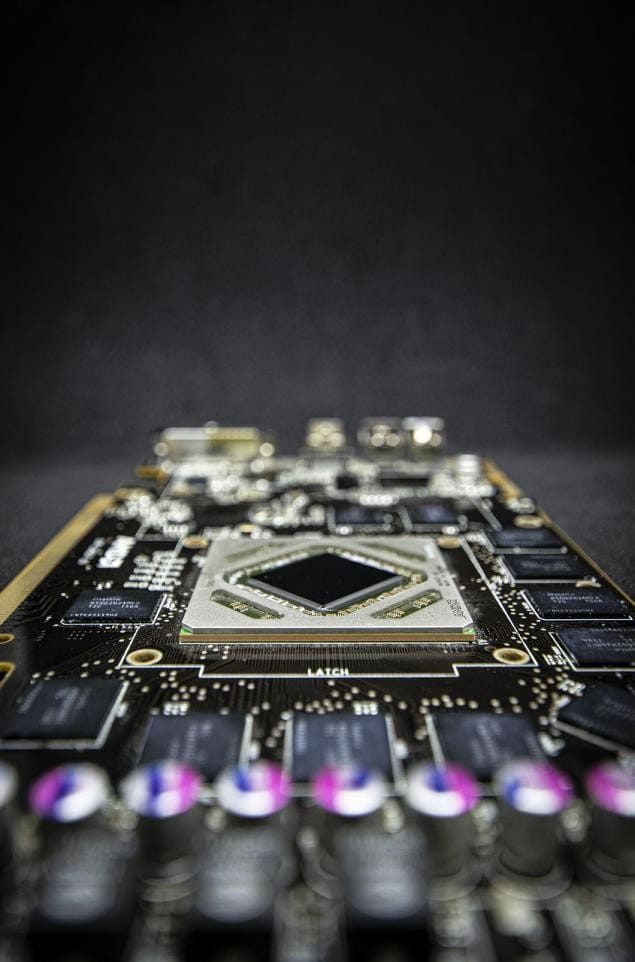

Image by Ödeldödel from Pixabay

As artificial intelligence gathers pace, becoming ever more capable and powerful, large language models (LLMs) are increasingly valuable. These models deliver the language understanding and generation abilities that AI systems need to function. They also provide the basis for generative AI, a vital part of modern AI.

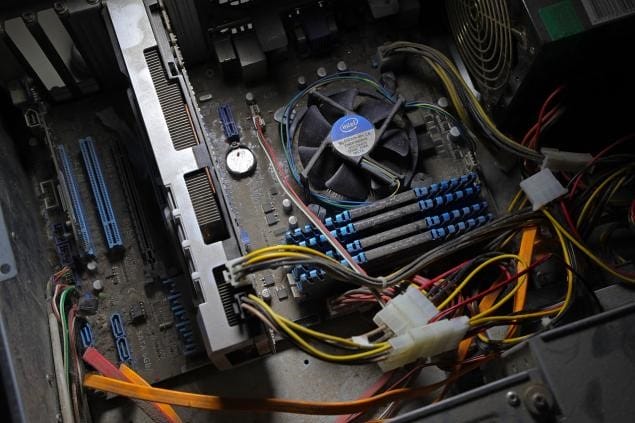

But where do these large language models come from? They need to be developed, and that requires high-performance computing systems capable of handling this development.

And at the heart of the high-performance system? An LLM GPU, the computing component that underpins the entire process. In this article, we'll look at these components in more detail and explore the role they play in LLM development.